Dallas rheumatologist Richard Stern, MD, has long been an early adopter of new technologies, dating back to his first computer in the early 1980s.

Recently, he began experimenting with artificial intelligence (AI). He uses ChatGPT to draft patient handouts about the various autoimmune diseases he treats, write appeal letters to payers that have rejected his prior authorization requests, and respond to patient portal messages. He has found it very effective, saving him both time and hassle. (See “Medicine Meets AI,” page 15.)

So, he wonders how payers are using AI – and suspects their applications are much more advanced.

“Payers have been using this for probably decades,” he told Texas Medicine. “It’s becoming more and more sophisticated. But until [ChatGPT] let the genie out of the bottle for the average person to use an AI program, it wasn’t anything we had access to.”

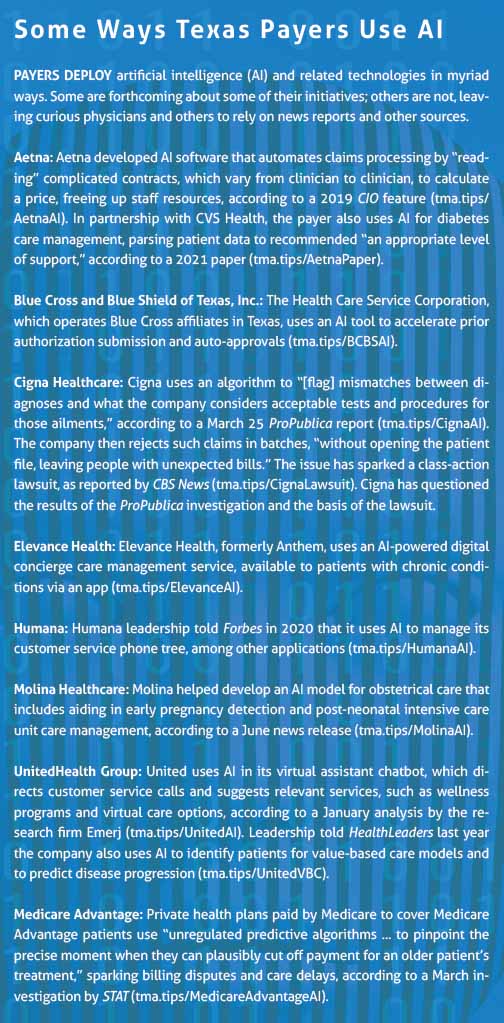

Although physicians increasingly are using AI themselves, they say they often lack access to models that are customized to their needs. Payers, on the other hand, have the resources to develop products that, for instance, process prior authorization requests – delivering near-instantaneous approvals where certain criteria are met – and enable value-based payment models, among other uses. Those products also employ algorithms that appear to systematically deny coverage and leave patients with surprise medical bills. (See “Some Ways Texas Payers Use AI,” right.)

Physicians, citing these findings and others, are worried about how payers’ AI products affect their patients and their practices. So, they’re seeking transparency.

Payers’ use of AI is “all kind of under this hidden hood, and we’re not allowed to look under it,” said Manish Naik, MD, chair of the Texas Medical Association’s Committee on Health Information Technology.

Based on the committee’s work, TMA’s House of Delegates voted at TexMed 2022 to adopt principles guiding the use of augmented intelligence in health care, saying such tools should “be developed transparently.” The policy specifically uses the term “augmented intelligence,” rather than AI, reflecting TMA’s belief that such technology “should be used to augment care” and should “co-exist with human decision-making.”

Similarly, the American Medical Association House of Delegates in June directed the association “to advocate greater regulatory oversight of the use of [AI] for review of patient claims and prior authorization requests, including whether insurers are using a thorough and fair process.”

Meanwhile, TMA leadership and staff are meeting with each of the five major Texas payers to discuss physician concerns, including the growing use of AI in health care.

“The playing field is not level,” Dr. Stern said.

The value of transparency

Ajay Gupta, MD, chair of TMA’s Council on Health Care Quality and chief medical officer of a large national payer, says transparency around new technologies benefits payers and physicians alike.

“By being transparent about it, we’ll get better buy-in” from physicians and health care professionals, the Austin family physician told Texas Medicine.

In response to physician feedback, his company is developing an AI tool to automate point-of-care prior authorization requests and approvals while reserving denials for its existing human-driven review process.

“The payers have really heard the issue that [physicians] are talking about when it comes to the prior authorization process,” Dr. Gupta said.

The tool also could help his company achieve several of its goals, including reducing the number of unnecessary appeals and denials, which Dr. Gupta says require “lots of manpower” to process; easing its relationship with physicians and health care professionals; and ensuring patients get the care they need in a timely fashion.

“The goal should be appropriate approvals and appropriate denials,” he said.

Other payer representatives whom Texas Medicine contacted were not available to be interviewed for this story.

In company reports, Aetna and UnitedHealthcare, for instance, acknowledge the potential of AI in health care.

Aetna says that while the technology isn’t new, health care companies’ adoption of it is. Calling AI “disruptive and transformative,” the payer says it “is unlocking the potential of these tools to help support better clinical and economic outcomes in treating chronic conditions” and value-based care.

UnitedHealth Group, the parent company of UnitedHealthcare, similarly touts AI’s potential to empower patients, physicians, and the overall health care industry. “As these solutions continue to evolve … we are committed to assessing and improving our [AI and machine learning] practices continuously, based on our own learnings, industry best practices, and regulatory guidance,” the payer wrote.

Growing physician awareness

Bryan Vartabedian, MD, chief medical officer of Texas Children’s Hospital in Austin, says payers and AI companies will have to earn physicians’ trust in such initiatives.

“Up until now, technology, categorically, I would say, has gotten in the way of the relationship between the doctor and the patient,” he said, mentioning exam room computers and monitors.

Dr. Vartabedian, who writes the 33 charts newsletter about medicine, technology, and culture, is more optimistic about AI tools like ChatGPT. But he expects many physicians will be skeptical, given the broken promises of earlier technological advances that only contributed to their administrative burden.

“The challenge for these companies is really proving that their technology is going to improve how they deliver care,” he said.

Physicians, he adds, should demand such proof.

“These are truly redefining moments in our profession,” Dr. Vartabedian said. “We need to play a more active role in deciding how these tools are used.”

Dr. Naik expects many payers are using AI-powered programs to process prior authorization requests. He thinks it’s a good idea but laments a general lack of communication beyond broad company statements.

“We don’t have as much transparency into the tools that they’re using, and even sometimes when we’ve asked questions … we’re told that it’s proprietary technology,” the internist and chief medical information officer at Austin Regional Clinic said.

If payers shared with physicians the criteria against which they assess prior authorization requests, for example, Dr. Naik believes the open communication could help cut down on unnecessary denials and appeals. Physicians also could use that information to develop AI tools of their own to automate their requests, multiplying the time savings.

Instead, Dr. Naik says he and his physician colleagues often see prior authorization requests denied – whether by a human or an AI tool – for necessary services and medications.

Dr. Naik also worries about the limitations of AI technology, which he said “is only as good as the input and how it was designed and structured, which, at the end of the day, was done by a human.”

For instance, an October 2019 study in Science found that an algorithm widely used by health systems to identify patients with complex health needs who would benefit from care management programs was significantly biased against Black patients.

“At a given risk score, Black patients are considerably sicker than White patients,” the authors wrote. “The bias arises because the algorithm predicts health care costs rather than illness, but unequal access to care means that we spend less money caring for Black patients than for White patients.”

Given studies like this one, the authors of an April 2020 article in the Bulletin of the World Health Organization argued for “a robust ethical and regulatory environment” around payers’ use of AI.

Their recommendations echo TMA’s own augmented-intelligence policy, which calls on sellers and distributors of augmented intelligence to, among other things:

- Disclose they have met all legal and regulatory requirements;

- Share with customers and users any known inherent risks associated with their product;

- Provide users with clear guidelines for reporting anomalies; and

- Provide customers and users with adequate training and reference materials.

Still, Dr. Naik welcomes AI’s rising profile, which he says is sparking physicians’ awareness of the technology as well as their curiosity about its health care applications.

“Starting the discussion is helpful,” he said. “We don’t get to a better place until we start talking about it.”