“Prepare Yourselves, Robots Will Soon Replace Doctors in Healthcare,” screamed the headline in a 2017 Forbes magazine article. Media coverage like that makes it easy to see why artificial intelligence (AI) sounds like scary science fiction to some physicians.

For Jeremy Gabrysch, MD, an Austin emergency physician, the reality of AI is a great deal more pedestrian – and more helpful – for both physicians and patients.

“Basically, when people think of AI in medicine a lot of times their mind just goes to doctors being replaced by robots, and that’s just not what the promise of AI in medicine is,” said Dr. Gabrysch, who runs Remedy, a company that helps people use their cellphones to connect with medical services.

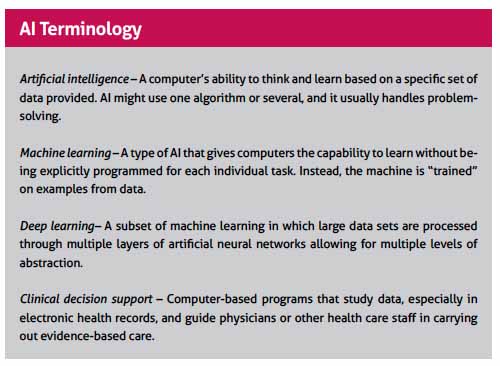

Generally defined, AI involves using computer-run algorithms and logic to do things human brains cannot do alone. Many AI systems also learn from the data they receive and use it to make predictions. (See “AI Terminology,” page 34.)

At Remedy, AI routes patients to the most efficient setting for care (a video visit, an in-person clinic visit, a house call, or the emergency room) based on their demographics and the symptoms they’re experiencing, Dr. Gabrysch says.

For now, Remedy’s AI is basically a logistics coordinator. But AI can do a great deal more than match up patients with the best place of care. In recent years, it’s been used in the U.S. to scan X-rays for features human eyes may not detect, screen women for cervical cancer, and predict which patients are most at risk for readmission to a hospital.

Advocates for AI, like Dr. Gabrysch, see it as a valuable tool on many levels. Rather than replacing physicians, they say, it will help doctors extend their reach and ease the nationwide physician shortage. Many believe this could improve access to medical care for all patients, especially low-income ones, and raise the quality of the care they receive.

“Physicians and care extenders [using AI] are going to be able to be more fully engaged in providing the human components of medicine that are so important because AI is going to do the heavy lifting of all the things that computers do better than we do,” Dr. Gabrysch says.

Most AI projects are still in development, not yet in clinical settings. But a 2018 survey of 200 U.S. health care leaders conducted by Intel and Convergys Analytics found that 54% of respondents expect widespread adoption of AI in medicine by 2023.

However, those same respondents also perceived a lack of trust in AI among 30% of physicians and 36% of patients.

“A lot of the physicians I talk to fall into two categories,” said Joshua Chang, MD, who heads up a research program that develops medicine-related AI algorithms at The University of Texas Dell Medical School in Austin. “Some of them are concerned that AI systems cannot be trusted as it is difficult to explain how they arrive at certain decisions. Others are quite excited about AI applications without really understanding what it can or cannot do.”

That inexperience with AI breeds fear of the unknown, says Joe Cunningham, MD, an internal medicine specialist who invests in medical technology firms through his Austin company Santé Ventures. AI clearly is going to change medicine, he says, but it’s not clear to anyone exactly how or when that’s going to happen.

“People overestimate what’s going to happen in the next one to two years, and underestimate what’s going to happen in the next five to 10 years,” said Dr. Cunningham, a former member of the Texas Medical Association Board of Trustees.

Getting to know AI

Most AI tools are computer software, not independent machines, and experts say many physicians already use AI as part of their electronic medical record systems.

Nevertheless, AI has not yet assumed the prominent role in medicine that it has taken in other industries and professions. For instance, Facebook uses machine learning to suggest friends to tag in photos; Netflix uses it to predict which movies viewers will like; automakers use it to build self-driving cars; and the financial industry uses it to approve loans. Meanwhile, the military uses deep learning, a more advanced subset of machine learning, to identify areas that are safe or unsafe for troops.

Adoption of AI in health care has been slower in part because dealing with patients in a medical setting can’t be boiled down to algorithms as easily as other activities can be, says internist C. Martin Harris, MD, chief business officer and associate vice president for health enterprise at Dell Medical. Medicine also requires human judgment, he says.

Medical AI has gone through several iterations already, the first of which was clinical decision support: guiding medical decisions based on a relatively established data set, Dr. Harris says.

“The classic example of that would simply be the idea that we are looking at a series of clinical results – a blood test, a blood pressure result, a weight result – and drawing a conclusion about the need for treatment. It’s rudimentary artificial intelligence,” Dr. Harris said.

As AI has evolved, it’s moved toward helping physicians understand patients in the context of their own lives, not just what the physician sees in a clinic, Dr. Harris says. For instance, it could anticipate patients’ medical needs by tracking factors such as their eating habits, living situation, and medical history, as well as relevant societal factors such as the local disease or crime rate. This approach would raise significant privacy concerns, but it could alert both the patient and the physician that it might be time for an appointment, or for more urgent treatment, Dr. Harris says.

Another important application, and the one that goes to the heart of physician anxiety about AI, is that AI can combine information about the patient with data from medical studies and other sources to diagnose illnesses or recommend treatment, Dr. Harris says. Radiology is seen as one of the most fertile grounds for medical AI because the visual nature of the specialty lends itself to analysis by AI algorithms.

“This is really an application of AI that’s going to increase our ability to detect even small variances that might not be caught by the human eye and facilitate the radiologist, in this case, and their ability to do much more because they can focus on the more difficult kinds of diagnoses where their skill and experience is really necessary,” Dr. Harris said.

Is this type of AI going to put human physicians out of business? Dr. Cunningham says it probably won’t even end the nationwide shortage of physicians. However, health care professionals will have to do what they’ve always done: adjust to new technologies.

“If you are good at learning, then profound change is an advantage to you because you learn and adapt,” he said. “If you don’t continue to learn, then you’re really well equipped to function in a world that no longer exists.”

New directions

A lot about AI remains unclear, even the rules that will govern it. In April 2019, the U.S. Food and Drug Administration announced steps to “consider a new regulatory framework specifically tailored to promote the development of safe and effective medical devices that use advanced artificial intelligence algorithms.”

It’s also unclear how prevalent AI is in physicians’ offices or how it’s being used. But among the health care leaders who responded to the Intel-Convergys Analytics survey, clinical uses of AI (cited by 77%) outpaced operational (41%) and financial (26%) applications.

A taste of the future of medical AI can be seen at Dell Medical, where Dr. Chang’s research program currently focuses on three AI projects.

One uses emergency medical technician data and demographic data to better gauge the severity of a patient’s stroke after a 911 call and then route the patient to the local hospital that best suits his or her needs.

Another uses data on infants born prematurely, who are prone to suddenly stopping breathing, to anticipate that problem and identify which infants might be at the highest risk.

A third uses data to determine the best use of electrostimulation, individualizing care for patients with chronic illnesses such as Parkinson’s disease or epilepsy.

In each case, the AI system must process and learn from a mountain of data. For instance, to assess a stroke, the AI must process reports from EMTs, comments from caregivers, physical signs such as the patient’s muscle strength, and perhaps even demographic information.

“We’re going to take all those signals and train a computer to say: ‘Given all these data points, is the patient likely to benefit from endovascular therapy?’” Dr. Chang said. “To a degree, this is what a lot of clinicians do subconsciously. … So what we’re doing is training the AI to mimic the clinician in that regard. But what a computer does really well is that it can [analyze a lot of data on] a lot of patients very quickly.”

What his research program is doing may not be as unusual as how it’s doing it, Dr. Chang says. While there’s a lot of interest in medical AI, most of it comes from large engineering and technical universities, not medical schools, he says.

“What’s cool is that a lot of the research [in this program] is being spearheaded from the medical side as opposed to an engineering department,” he said. “Because we’re coming from a medical perspective, we not only have access to a lot of the data necessary, but we can evaluate it in context of patient care and clinical practice.”

That’s vital because AI based on bad or incomplete data can cause problems. In 2018, a team from Germany, France, and the U.S. introduced a deep learning system that, in their study, identified melanomas more accurately than dermatologists did. The study made headlines in the international press.

But there was a problem, says Adewole Adamson, MD, a dermatologist and professor of internal medicine at Dell Medical. The image database used to train the system was extensive, containing more than 100,000 moles and melanomas, but it had a glaring omission.

“There were almost zero moles or melanomas from people of color,” he said. “And they definitely didn’t have them on the palms and the soles, which are the primary place where folks of color get melanoma.”

Dr. Adamson co-wrote a commentary in the November 2018 issue of JAMA Dermatology laying out the problem. The team behind the original study is looking to correct the deficiencies, but the controversy highlighted what is widely acknowledged as the technology’s biggest weakness – AI is only as good as its data.

“You’re building these databases that are biased from the jump,” he said. “You’re either going to have to put a warning label on them that says, ‘This may not be usable on all skin types,’ or you’re going to have to design stuff with equity in mind.”

Dr. Adamson has not used AI directly to treat patients. However, that’s likely to change soon. In his role as a dermatologist and researcher specializing in melanoma, he recently acquired a device designed to help triage atypical moles using AI technology. He plans to get to know the device and phase it in slowly.

“Clinicians need to be aware that this is not going to suddenly alter practice,” he said. “There are going to have to be a lot of different steps that have to happen from a safety point of view before [AI] can be ready for prime time.”

At the same time, he believes AI holds a lot of promise.

“I want it to succeed,” he said. “And I think we need to embrace it and help shape it so that it can actually be useful for us as clinicians and also help our patients.”

Tex Med. 2019;115(10):32-35

October 2019
